3. AlexNet Model

1. Introduction to AlexNet

AlexNet is a pioneering convolutional neural network (CNN) architecture that significantly advanced the field of computer vision. Developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, AlexNet won the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC), outperforming approaches based on traditional computer vision techniques by a large margin. This success demonstrated the power of deep learning for image classification.

2. Components of AlexNet

Architecture Overview

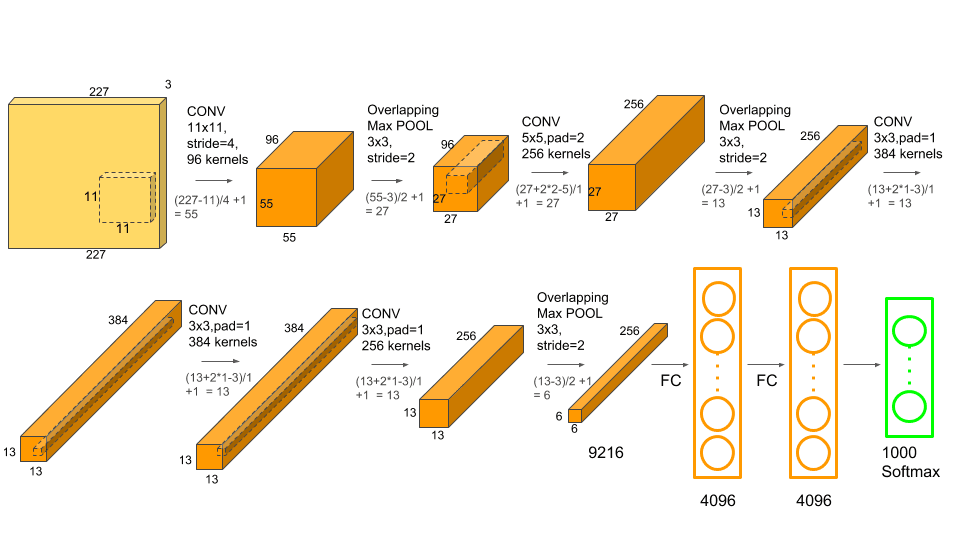

AlexNet has eight learned layers: five convolutional layers followed by three fully connected layers. Max-pooling layers follow the first, second, and fifth convolutional layers. Here’s a detailed breakdown of its components:

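Input Layer: Accepts RGB images of size 224x224x3, as stated in the original paper. Many reimplementations use 227x227 inputs so that the first convolutional layer produces exactly 55x55 feature maps.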

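Convolutional Layers: The first convolutional layer applies 96 filters of size 11x11 with a stride of 4, and the second applies 256 filters of size 5x5. The third, fourth, and fifth layers use smaller 3x3 filters (384, 384, and 256 filters, respectively). The large early filters give each unit a wide receptive field on the high-resolution input, while the stride of 4 quickly reduces the spatial size.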

Activation Function: The ReLU (Rectified Linear Unit) activation is applied after each convolutional layer and each hidden fully connected layer to introduce non-linearity. Compared with saturating activations such as tanh, ReLU made training considerably faster.

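Max-Pooling Layers: Max-pooling layers after the first, second, and fifth convolutional layers reduce the spatial dimensions and provide a degree of invariance to small translations. AlexNet uses overlapping pooling with 3x3 windows and a stride of 2. The authors found that this slightly reduced both error and overfitting compared with non-overlapping pooling.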

Fully Connected Layers: The three fully connected layers at the end of the network (4096, 4096, and 1000 neurons) integrate high-level features for final classification. The last layer feeds a 1000-way softmax that outputs a probability for each ImageNet class.
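
To make the layer sizes concrete, here is a minimal sketch that traces a feature map's spatial shape through the convolution and pooling stack. It uses the standard output-size formula $\lfloor (W - F + 2P)/S \rfloor + 1$. The sketch assumes a 227x227x3 input, the filter counts and strides listed above, and the padding values used in common single-GPU reimplementations (2 for conv2, 1 for conv3 to conv5). It ignores the original two-GPU split. The names `conv_output_size`, `LAYERS`, and `trace_shapes` are illustrative, not from the paper.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Output length along one spatial axis for a convolution or pooling window."""
    return (size - kernel + 2 * padding) // stride + 1


# (name, kind, kernel, stride, padding, out_channels); pooling keeps the channel count.
# Assumed single-GPU configuration: filter counts and strides from the paper,
# padding values as used in common reimplementations.
LAYERS = [
    ("conv1", "conv", 11, 4, 0, 96),
    ("pool1", "pool", 3, 2, 0, None),
    ("conv2", "conv", 5, 1, 2, 256),
    ("pool2", "pool", 3, 2, 0, None),
    ("conv3", "conv", 3, 1, 1, 384),
    ("conv4", "conv", 3, 1, 1, 384),
    ("conv5", "conv", 3, 1, 1, 256),
    ("pool5", "pool", 3, 2, 0, None),
]


def trace_shapes(input_size=227, in_channels=3):
    """Return (name, height, width, channels) after each layer in LAYERS."""
    size, channels = input_size, in_channels
    shapes = []
    for name, kind, kernel, stride, padding, out_channels in LAYERS:
        size = conv_output_size(size, kernel, stride, padding)
        if kind == "conv":
            channels = out_channels
        shapes.append((name, size, size, channels))
    return shapes


if __name__ == "__main__":
    for name, height, width, channels in trace_shapes():
        print(f"{name}: {height}x{width}x{channels}")
    _, height, width, channels = trace_shapes()[-1]
    print("flattened input to fc6:", height * width * channels)  # 9216
```

The successive spatial sizes are 55, 27, 27, 13, 13, 13, 13, and 6. The final 6x6x256 map flattens to 9,216 values, which feed the first 4096-unit fully connected layer. ReLU and normalization layers do not change shapes, so they are omitted. With a 224x224 input and no padding, the first layer would instead produce 54x54 maps, because (224 - 11)/4 is not an integer.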

Mathematical Operations in AlexNet

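Convolution Operation: The convolutional layers slide filters over the input to extract features. As in most deep learning libraries, the operation is computed as a cross-correlation:

$$S(i, j) = (I * K)(i, j) = \sum_{m} \sum_{n} I(i + m, j + n)\, K(m, n)$$

where $I$ is the input image, $K$ is the filter, and $i, j$ are spatial coordinates.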
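
The convolution operation can be sketched as a direct loop. For stride $s$, the loop computes $S(i, j) = \sum_m \sum_n I(i \cdot s + m, j \cdot s + n)\, K(m, n)$. This minimal pure-Python illustration assumes a single-channel input, a single filter, and no padding. A real AlexNet layer also sums over all input channels, adds a bias, and runs many filters in parallel on the GPU. The function name `conv2d` is hypothetical.

```python
def conv2d(image, kernel, stride=1):
    """Valid (unpadded) 2D cross-correlation of one channel with one filter."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out_h = (ih - kh) // stride + 1
    out_w = (iw - kw) // stride + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the window whose top-left corner is (i * stride, j * stride).
            total = 0.0
            for m in range(kh):
                for n in range(kw):
                    total += image[i * stride + m][j * stride + n] * kernel[m][n]
            row.append(total)
        output.append(row)
    return output


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(conv2d(image, [[1, 1], [1, 1]]))  # [[12.0, 16.0], [24.0, 28.0]]
```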

ReLU Activation: ReLU introduces non-linearity by replacing negative values with zero:

$$f(x) = \max(0, x)$$

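Max-Pooling: Max-pooling reduces spatial dimensions by selecting the maximum value from each local region of the feature map:

$$y_{i,j} = \max_{(p, q) \in R_{i,j}} x_{p,q}$$

where $x$ is the input feature map and $R_{i,j}$ is the pooling window associated with output position $(i, j)$.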
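
Max-pooling can be sketched in the same style. The default 3x3 window with a stride of 2 mirrors AlexNet's overlapping pooling, in which neighboring windows share a row or column. Setting `stride` equal to `size` gives conventional non-overlapping pooling. The single-channel input and the name `max_pool2d` are assumptions for illustration.

```python
def max_pool2d(feature_map, size=3, stride=2):
    """Maximum over each size x size window; stride < size means windows overlap."""
    h, w = len(feature_map), len(feature_map[0])
    out_h = (h - size) // stride + 1
    out_w = (w - size) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + m][j * stride + n]
                for m in range(size)
                for n in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


if __name__ == "__main__":
    fmap = [[5 * r + c for c in range(5)] for r in range(5)]
    print(max_pool2d(fmap))  # [[12, 14], [22, 24]]
```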

Fully Connected Layers: Each neuron in the fully connected layers computes a weighted sum of its inputs, adds a bias term, and applies an activation function:

$$y = f(Wx + b)$$

where $W$ is the weight matrix, $x$ is the input vector, $b$ is the bias vector, and $f$ is the activation function (ReLU for the hidden layers).
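
A fully connected layer, its ReLU activation, and the final softmax fit in a few lines. In this sketch the helper names are hypothetical and weight matrices are plain lists of rows. The softmax subtracts the largest logit before exponentiating. This standard trick avoids overflow and does not change the result.

```python
import math


def relu(values):
    """f(x) = max(0, x), applied element-wise."""
    return [max(0.0, v) for v in values]


def fully_connected(weights, x, bias):
    """Compute W x + b, with W given as a list of rows."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(weights, bias)]


def softmax(logits):
    """Convert logits to probabilities; shifting by the max keeps exp() in range."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    W = [[0.5, 0.0, 1.0], [-1.0, 1.0, 0.0]]
    x = [1.0, -2.0, 0.5]
    b = [0.0, 1.0]
    hidden = relu(fully_connected(W, x, b))  # [1.0, 0.0]
    print(hidden, softmax(hidden))
```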

3. Architectural Innovations

Local Response Normalization (LRN):

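- AlexNet used LRN to normalize each neuron's output by the activity of neurons at the same spatial position in adjacent feature maps, a form of lateral inhibition. It was applied after the ReLU in the first and second convolutional layers. The authors reported that it reduced top-1 and top-5 error rates by 1.4% and 1.2%, respectively.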
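
For reference, the AlexNet paper defines the normalized activity for kernel $i$ at position $(x, y)$, in a layer with $N$ kernels, as

$$b^i_{x,y} = \frac{a^i_{x,y}}{\left(k + \alpha \sum_{j=\max(0,\, i - n/2)}^{\min(N-1,\, i + n/2)} \left(a^j_{x,y}\right)^2\right)^{\beta}}$$

with $k = 2$, $n = 5$, $\alpha = 10^{-4}$, and $\beta = 0.75$. The sketch below applies this formula to the vector of channel activations at a single spatial position. The function name is hypothetical. Some framework LRN layers (for example PyTorch's and Caffe's) divide $\alpha$ by $n$, so their parameters are not directly interchangeable with the paper's.

```python
def local_response_norm(channels, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Normalize the activations a^i at one (x, y) position across neighboring channels."""
    num = len(channels)
    half = n // 2
    out = []
    for i, a_i in enumerate(channels):
        # Sum of squares over channels i - n/2 .. i + n/2, clipped to the valid range.
        lo, hi = max(0, i - half), min(num - 1, i + half)
        sq_sum = sum(channels[j] ** 2 for j in range(lo, hi + 1))
        out.append(a_i / (k + alpha * sq_sum) ** beta)
    return out


if __name__ == "__main__":
    print(local_response_norm([1.0, 2.0, 3.0, 4.0, 5.0, 6.0]))
```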

Dropout:

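- Dropout with a rate of 0.5 was applied to the first two fully connected layers during training. Randomly zeroing neurons prevents them from relying on specific other neurons (co-adaptation) and improves generalization. At test time, all neurons are used and their outputs are multiplied by 0.5.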
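
Here is a minimal sketch of dropout as the AlexNet paper describes it. During training, each unit is zeroed with probability 0.5. At test time, every unit is kept and its output is scaled by 0.5 to match the expected training-time activation. The function name and the injectable `rng` parameter are illustrative assumptions. Most modern frameworks instead use "inverted" dropout, which scales the surviving units by $1/(1-p)$ during training and leaves test-time outputs unchanged.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """AlexNet-style dropout: drop units with probability p while training,
    and scale all outputs by (1 - p) at test time."""
    if training:
        return [0.0 if rng.random() < p else a for a in activations]
    return [a * (1.0 - p) for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)
    print(dropout([1.0] * 10, rng=rng))            # roughly half the entries are 0.0
    print(dropout([2.0, 4.0], training=False))     # [1.0, 2.0]
```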

Data Augmentation:

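- The training data was augmented in two ways. Random 224x224 crops (and their horizontal reflections) were extracted from the 256x256 training images, and the intensities of the RGB channels were altered using principal component analysis (PCA). These transformations increase the diversity of the training data and reduce overfitting.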
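
The cropping and flipping step can be sketched as follows. The image is represented as a list of rows so that the example stays dependency-free; each pixel could equally be an RGB tuple. AlexNet used 224x224 crops from 256x256 images, while the example uses small sizes so the result is easy to inspect. The PCA-based color alteration is not shown, and the function name is hypothetical.

```python
import random


def random_crop_and_flip(image, crop_h, crop_w, rng=random):
    """Return a random crop_h x crop_w patch, mirrored left-to-right with probability 0.5."""
    h, w = len(image), len(image[0])
    top = rng.randint(0, h - crop_h)     # randint is inclusive at both ends
    left = rng.randint(0, w - crop_w)
    patch = [row[left:left + crop_w] for row in image[top:top + crop_h]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]  # horizontal reflection
    return patch


if __name__ == "__main__":
    img = [[10 * r + c for c in range(8)] for r in range(8)]
    for row in random_crop_and_flip(img, 4, 4, rng=random.Random(1)):
        print(row)
```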

4. Training and Optimization

Training Methodology:

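- AlexNet was trained using stochastic gradient descent (SGD) with a momentum of 0.9 and a weight decay of 0.0005. Weights were initialized from a zero-mean Gaussian distribution with a standard deviation of 0.01. The learning rate started at 0.01 and was divided by 10 whenever the validation error stopped improving. Training on GPUs took five to six days.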
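
The paper states its update rule explicitly, with the weight-decay term folded into the velocity:

$$v_{i+1} = 0.9\, v_i - 0.0005\,\epsilon\, w_i - \epsilon \left\langle \frac{\partial L}{\partial w} \right\rangle_{D_i}, \qquad w_{i+1} = w_i + v_{i+1}$$

Here $\epsilon$ is the learning rate and the angle brackets denote the gradient averaged over mini-batch $D_i$. The sketch below implements one step for a flat list of weights. The function name is hypothetical. The toy loss $L(w) = \tfrac{1}{2}(w - 3)^2$ in the usage example exists only to show the update converging.

```python
def sgd_momentum_step(weights, velocity, grads, lr=0.01, momentum=0.9, weight_decay=0.0005):
    """One update of the form:
        v <- momentum * v - weight_decay * lr * w - lr * grad
        w <- w + v
    """
    new_velocity = [momentum * v - weight_decay * lr * w - lr * g
                    for w, v, g in zip(weights, velocity, grads)]
    new_weights = [w + v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


if __name__ == "__main__":
    # Toy loss L(w) = 0.5 * (w - 3)^2, whose gradient is (w - 3).
    w, v = [0.0], [0.0]
    for _ in range(1000):
        w, v = sgd_momentum_step(w, v, [w[0] - 3.0])
    print(w[0])  # close to 3 / 1.0005, pulled slightly toward 0 by weight decay
```

Without weight decay, the loop would settle at exactly 3. The weight-decay term shifts the fixed point to $3/1.0005$. PyTorch's `torch.optim.SGD` uses a slightly different momentum formulation, so its hyperparameters do not map one-to-one onto this rule.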

Batch Processing:

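- Training used mini-batches of 128 images. Because the network, with about 60 million parameters, did not fit on a single GPU, it was split across two NVIDIA GTX 580 GPUs with 3GB of memory each, which communicated only in certain layers. This combination of mini-batch processing and parallel GPU computation made it practical to train a network of that size.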
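
The parameter count can be checked with a quick tally of weights and biases per layer. The sketch assumes the single-GPU layer sizes: 96, 256, 384, 384, and 256 filters, then 4096, 4096, and 1000 fully connected units. It ignores the original two-GPU split, in which conv2, conv4, and conv5 each see only half of the previous layer's channels. With that split, the total drops to roughly 61 million. The helper names are hypothetical.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weights (kernel x kernel x in x out) plus one bias per filter."""
    return kernel * kernel * in_channels * out_channels + out_channels


def fc_params(in_features, out_features):
    """Weight matrix plus one bias per output unit."""
    return in_features * out_features + out_features


LAYER_PARAMS = {
    "conv1": conv_params(11, 3, 96),
    "conv2": conv_params(5, 96, 256),
    "conv3": conv_params(3, 256, 384),
    "conv4": conv_params(3, 384, 384),
    "conv5": conv_params(3, 384, 256),
    "fc6": fc_params(6 * 6 * 256, 4096),  # flattened 6x6x256 pool5 output
    "fc7": fc_params(4096, 4096),
    "fc8": fc_params(4096, 1000),
}

if __name__ == "__main__":
    for name, count in LAYER_PARAMS.items():
        print(f"{name}: {count:,}")
    print(f"total: {sum(LAYER_PARAMS.values()):,}")  # total: 62,378,344
```

The total comes to 62,378,344 parameters. About 94% of them (58,631,144) sit in the three fully connected layers, and fc6 alone holds about 37.8 million.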

5. Impact and Legacy

Performance: AlexNet achieved a top-5 test error rate of 15.3% in ILSVRC-2012, compared with 26.2% for the second-best entry. This result significantly outperformed previous methods and demonstrated the potential of deep learning in computer vision.
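
Under the top-5 metric, a prediction counts as correct if the true label is among the five highest-scoring classes. The sketch below computes the general top-k error from per-class scores. The function name and the three-class example are illustrative. With 1000 ImageNet classes and `k=5`, it measures the quantity behind the 15.3% figure.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is not among the k highest-scoring classes."""
    misses = 0
    for class_scores, label in zip(scores, labels):
        ranked = sorted(range(len(class_scores)), key=lambda c: class_scores[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


if __name__ == "__main__":
    scores = [[0.1, 0.5, 0.4], [0.7, 0.2, 0.1]]
    labels = [2, 2]
    print(top_k_error(scores, labels, k=1))  # 1.0
    print(top_k_error(scores, labels, k=2))  # 0.5
```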

Architectural Influence: AlexNet’s success catalyzed the development of deeper and more complex CNN architectures, leading to breakthroughs in various computer vision tasks such as object detection, image segmentation, and facial recognition.

Deep Learning Revolution: The widespread adoption of deep learning in academia and industry is often traced back to AlexNet, which showed that deep neural networks could be effective on large-scale, real-world tasks.

6. Conclusion

AlexNet represents a milestone in the history of deep learning and computer vision, marking the beginning of the deep learning revolution. Its architectural innovations, such as deep convolutional layers, ReLU activations, and efficient training methodologies, set the stage for subsequent advancements in artificial intelligence and image processing. Today, the principles and techniques pioneered by AlexNet continue to underpin state-of-the-art deep learning models, driving progress in fields ranging from autonomous driving to medical imaging.